Does your benchmark have a witness post?

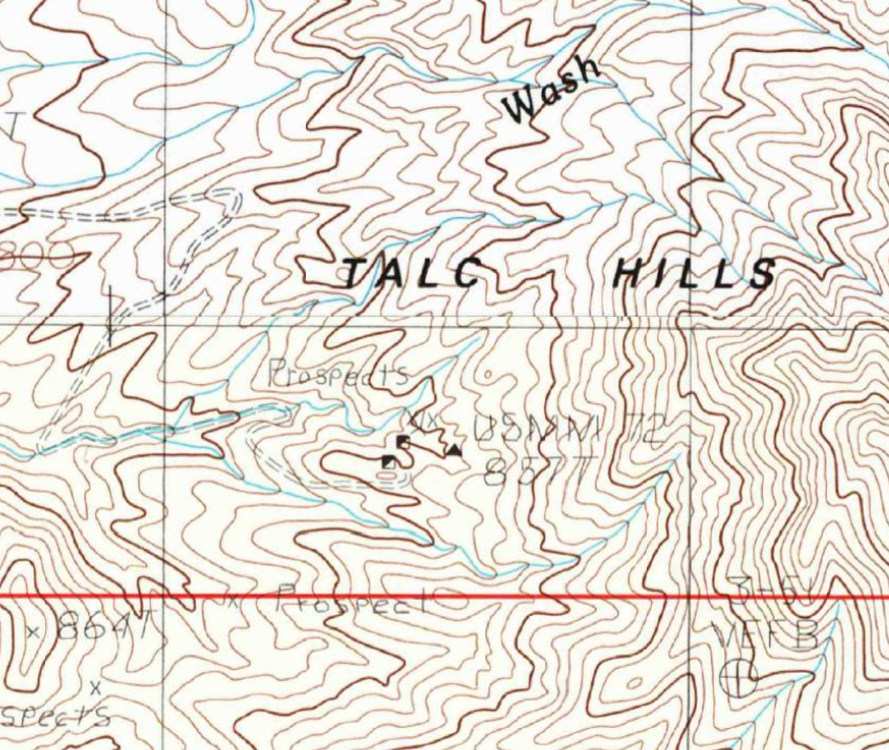

Not far from the crumbling remains of the Confidence Mine, I wandered through the sunbaked emptiness of Death Valley. The wind moved through the canyons, whispering like it had stories to tell—maybe about the miners who once chased their fortunes here, maybe just about the heat. I wasn’t looking for gold, just a piece of history, something left behind. After a few hours of picking my way through the barren Talc Hills, I crested a ridge, and there it was: USLM 72.

At first glance, it isn't much to look at: just a weather-beaten wooden post, leaning ever so slightly, standing atop a five-foot pile of hand-stacked rocks. A cairn, anchoring it against time, erosion, flash floods, and the relentless winds. It’s the kind of thing you’d miss if you weren’t paying attention, but it's a relic with a fascinating story to tell. For those who know what they’re looking at, these old United States Location Monuments (USLMs) are more than just curiosities; they anchor certainty onto a landscape known for its uncertainty.

USLM 72 in real life and as a triangle on the USGS topographic map. The map actually labels it USMM because, when it was first located (look closely in the photograph for an older wooden post down to the left), it was a United States Mining Monument; it has since been redesignated a United States Location Monument.

A hundred years ago, when the west was more empty than full, surveyors and miners drove their markers into the dirt, staking claims and carving order out of wilderness. The desert doesn’t care much for permanence, though. Rivers go dry, mountains crumble, and trails vanish under shifting sand. But a well-placed benchmark, if someone bothers to maintain it, can hold its ground. It’s a promise that something, at least one thing, stays put and can be trusted. Without it, the maps stop making sense, property lines turn into arguments, and before you know it, you’re just another lost soul staring at the horizon, trying to remember which way was home.

That’s the lesson that stuck with me as I stood there under the blazing desert sun. In a world of ever-changing landscapes, whether physical or digital, benchmarks are more than just historical artifacts. They’re tools for survival.

In cybersecurity, we face a similar kind of uncertainty. Threats evolve at a relentless pace, much like the shifting sands of the Mojave. New vulnerabilities are discovered, new attackers emerge, and what worked yesterday may no longer work today. Just as those old miners couldn’t afford to rely on a faulty map, organizations can’t afford to rely on outdated or poorly chosen cybersecurity benchmarks.

We stole the term benchmark from the physical world, stuffed it into Business Jargon 101, and then promptly forgot where it came from. That’s a shame, and something I intend to fix with this post. The connection has always seemed obvious to me. Surveyors and business leaders alike use benchmarks to navigate uncertainty. Both know that conditions change, and if you don’t adapt, you’ll find yourself lost. And both understand a hard truth: a benchmark that’s ignored or forgotten is just a pile of rocks, useless at best, dangerous at worst.

So, in this post, I’ll take you on a brief journey through the history of survey benchmarks and their role in taming the untamed, while charting a course to how cybersecurity benchmarks guide us through the digital desert. Whether you’re navigating forlorn trails of the past or defending your network from unseen threats, the right benchmarks, and the discipline to maintain them, are what keep you from getting lost.

What’s a Benchmark, Anyway?

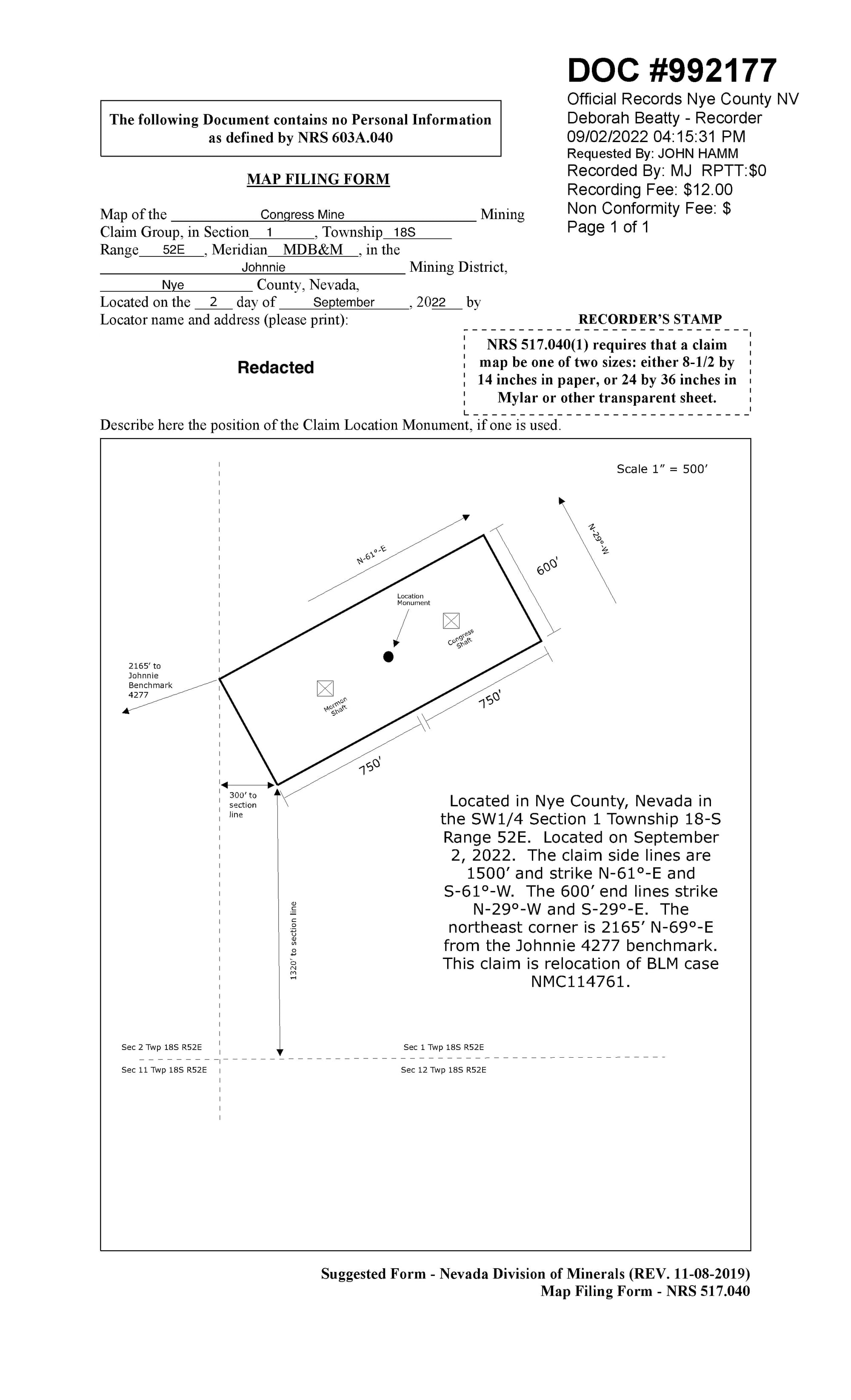

In the early days of the United States, land surveying was a fundamental tool for expansion, settlement, and infrastructure development. As settlers moved westward, precise land measurements became essential for property rights, taxation, and projects like roads and railroads. The earliest benchmarks in the U.S. were simple markers, often wooden stakes, stone cairns, or chiseled marks on prominent rock formations, placed by local surveyors to establish property lines and reference points.

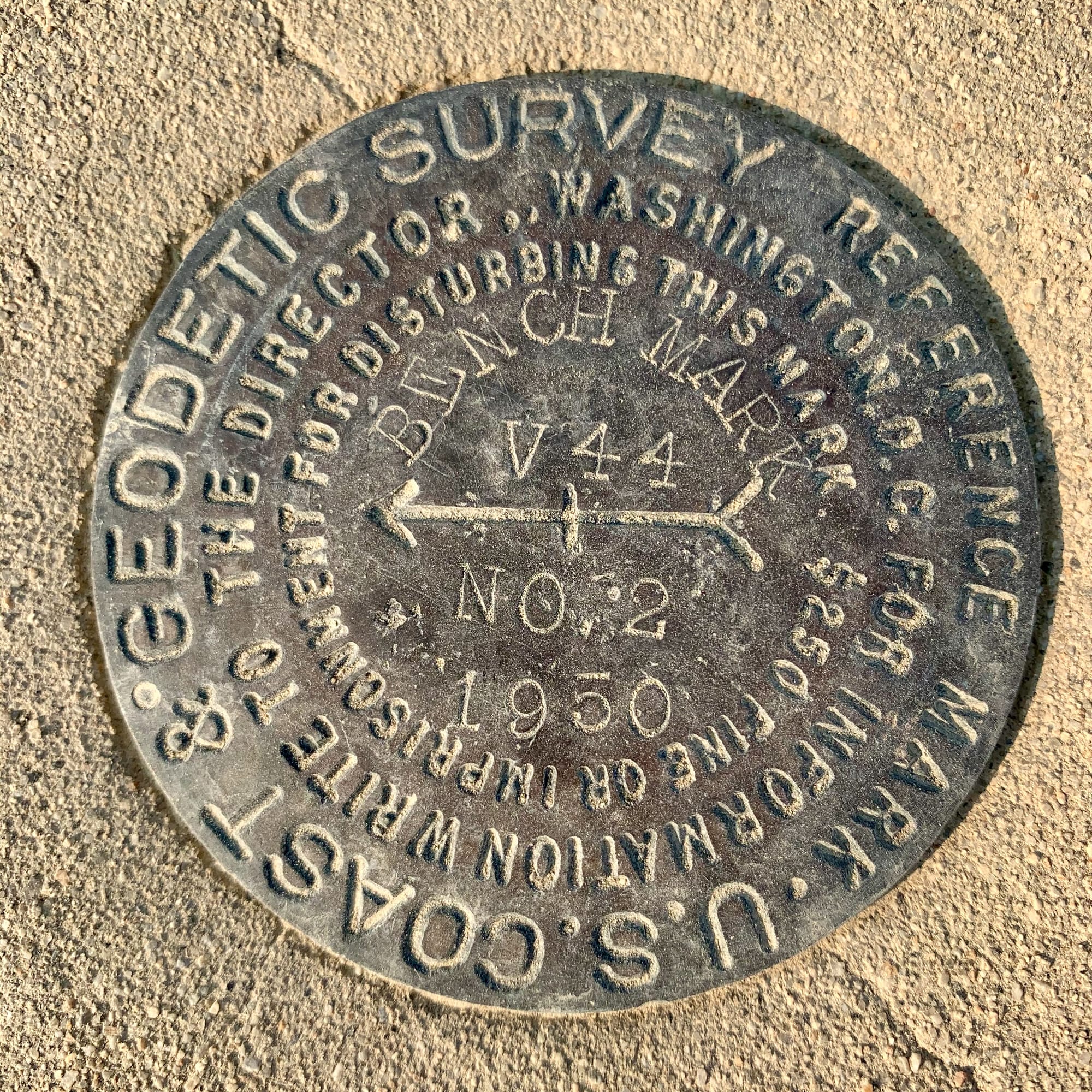

However, as the country grew, so did the need for a standardized and scientifically rigorous system of measurement. This led to the founding in 1807 of the Survey of the Coast, one of the nation’s earliest scientific agencies, which later became the United States Coast and Geodetic Survey (USC&GS). The goal was to create a nationwide geodetic network, a fixed coordinate system that could be relied upon for everything from land division to navigation. The earliest formal benchmarks placed under this system were primarily along the eastern seaboard, used for coastal mapping and navigation.

The real transformation came in the 1920s, when the United States embarked on a massive nationwide geodetic survey effort. This was the first great push to create an interconnected, continent-wide framework of benchmarks, designed to improve precision in mapping and engineering projects. The USC&GS (now known as the National Geodetic Survey) set tens of thousands of permanent benchmarks, often in remote and rugged locations. These benchmarks were typically metal disks embedded in concrete or stone, stamped with unique identification numbers and elevation data.

The benchmark atop Telescope Peak, 11,043 feet above sea level, and the view back down into Death Valley, the lowest point in North America at 282 feet below sea level.

Many of the benchmarks I’ve encountered out west, including some of the markers in my own collection of photographs, date back to this era. The sheer scale of this undertaking was staggering. Survey teams, often working in harsh conditions, traveled across deserts, mountains, and plains, placing these markers at precisely measured locations.

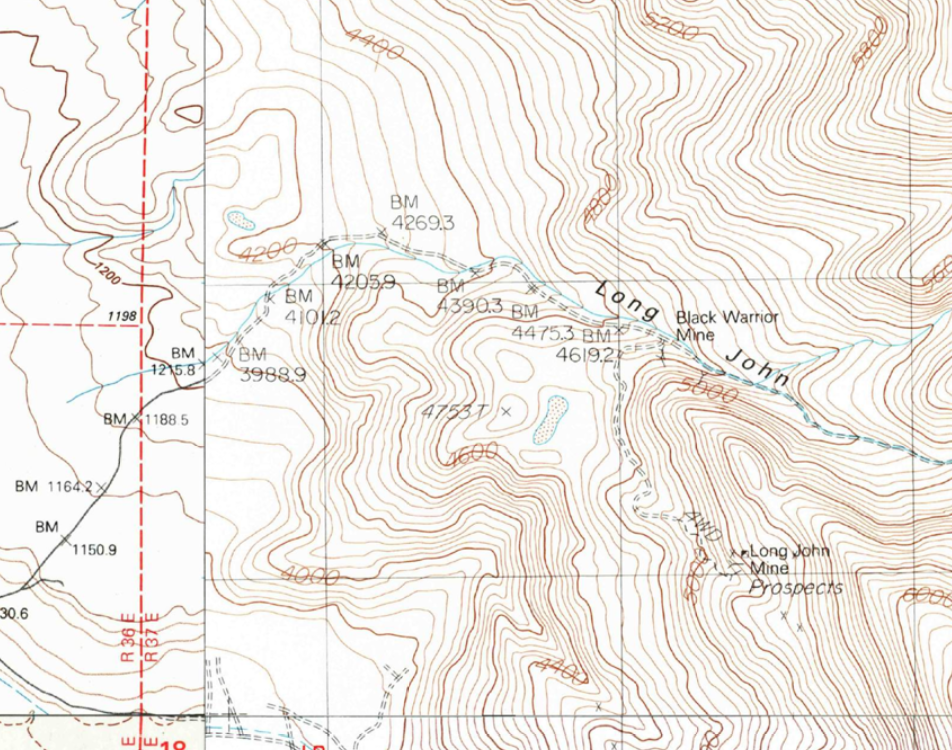

Back then, before satellites and lasers, surveyors measured the land with chains. Not metaphorical ones, but actual chains of metal links, each chain 66 feet long, dragged over hills and across dry lake beds. Two men, one at each end, stretching the chain tight, marking the spot, and then moving forward, leapfrogging their way across the landscape (in the topo map below, you can see how they created the road up Long John Canyon using this approach, as each BM is a stop along that journey). It was slow, methodical work, made harder by wind, shifting sand, and the occasional rattlesnake. Distances weren’t just guessed; they were counted, 66 feet at a time, each measurement adding up to something that might one day become a railroad, a town, or just a name on a map. Even today, many of these benchmarks remain in use, their data incorporated into modern GPS systems.
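For a feel of the arithmetic behind all that counting, here is a small sketch. It assumes the 66-foot chain described above and the 5,280-foot statute mile; the function names are purely illustrative.

```python
CHAIN_FEET = 66        # length of one surveyor's chain, in feet
FEET_PER_MILE = 5280   # statute mile, in feet


def chains_to_feet(chains: float) -> float:
    """Convert a count of chain lengths into feet."""
    return chains * CHAIN_FEET


def feet_to_chains(feet: float) -> float:
    """Convert a distance in feet into chain lengths."""
    return feet / CHAIN_FEET


# How many times does the crew stretch the chain to cover one mile?
chains_per_mile = feet_to_chains(FEET_PER_MILE)
print(chains_per_mile)  # 80.0
```

Eighty stretches of the chain per mile, every one of them a chance for a small error to creep in, which is part of why fixed reference points mattered so much.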

USC&GS benchmark X44, one of about a dozen benchmarks along Long John Canyon in the Inyo Mountains, California. X44 isn't directly referred to on the USGS map; to correlate, you need to take your latitude and longitude and figure out which one of those many BMs you were near. Before GPS, the ability to get your approximate location and then find an accurate benchmark was critical to knowing where you were for land survey purposes. In this case, X44 is BM 4205.9 on the map. That number indicates the elevation above sea level in feet, but notice that on the left-hand join of the maps it's in metric. We've not been great about unit standardization!

Using triangulation, these benchmarks formed an invisible web of reference points, allowing future surveyors to establish accurate elevations and positions anywhere in the country. Mining operations, railroad expansions, and large-scale infrastructure projects depended on these benchmarks. Without them, engineers would have had no reliable way to measure distances, ensure level grades for rail lines, or establish property boundaries with certainty.

Yet, just like in cybersecurity, these benchmarks required, and still require, ongoing maintenance and updates. Some have been lost to erosion, vandalism, or development. Others have been replaced by more precise, satellite-based measurements. However, the principle remains the same: without reliable benchmarks, whether in geography or in cybersecurity, we risk losing our way.

The Danger of Outdated or Misapplied Benchmarks

A benchmark is only as good as its accuracy. In the world of land surveying, a misplaced or deteriorated marker can introduce massive errors into maps, property lines, and infrastructure projects. The same is true in cybersecurity: benchmarks must be relevant, up to date, and applied correctly, or they create a false sense of security rather than a true measure of resilience.

Lost, Moved, or Forgotten: The Fate of Survey Benchmarks

Survey markers are meant to last, but time has other plans. Over the decades, many benchmarks from the early U.S. geodetic surveys have been lost to erosion, buried by development, or even stolen by collectors. Some have been misinterpreted: what was once a crucial reference point might now be mistaken for an old piece of trash, forgotten in the wilderness.

One example is the subsidence problem that affects benchmarks tied to elevation measurements. If the ground beneath a benchmark shifts due to earthquakes, mining activity, water extraction, or natural erosion, the elevation recorded at that point is no longer accurate. Anyone relying on that data without verification risks costly miscalculations.

Surveyors today don’t just trust old benchmarks blindly; they verify them. They cross-reference historical data with new measurements to ensure accuracy. Cybersecurity professionals need to take the same approach: just because a security benchmark was valid in 2015 doesn’t mean it still applies in 2025.

V44, a vertical control mark (VCM), was first set in 1950. Due to the continued pumping of groundwater by the Los Angeles Department of Water and Power, the ground has slumped noticeably in the Owens Valley, requiring a VCM reset in 1984.
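The verify-before-you-trust habit can be sketched in a few lines. This is only an illustration: the mark names, the elevations, and the 0.1-foot tolerance are all made-up assumptions, not real survey data or a surveying standard.

```python
from dataclasses import dataclass


@dataclass
class ElevationCheck:
    mark: str            # hypothetical mark identifier
    recorded_ft: float   # elevation on record for the mark
    measured_ft: float   # fresh measurement taken in the field


def needs_reset(check: ElevationCheck, tolerance_ft: float = 0.1) -> bool:
    """Return True when the record and the new measurement disagree
    by more than the tolerance (an assumed value, for illustration)."""
    return abs(check.recorded_ft - check.measured_ft) > tolerance_ft


# Illustrative numbers only
stable = ElevationCheck("MARK-A", recorded_ft=4000.0, measured_ft=4000.02)
slumped = ElevationCheck("MARK-B", recorded_ft=3900.0, measured_ft=3898.6)

for check in (stable, slumped):
    status = "reset needed" if needs_reset(check) else "still trustworthy"
    print(f"{check.mark}: {status}")
```

The same comparison works for a security control: record what you believed when you set it, measure what is true today, and act when the gap exceeds what you can tolerate.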

Cybersecurity Benchmarks That No Longer Hold Up

Cybersecurity benchmarks, like old survey markers, have a way of sticking around long after they’ve lost their usefulness. People follow them out of habit, not because they still make sense. The trouble is, an outdated benchmark doesn’t just fail to guide; it actively leads you in the wrong direction. Here are a few of my current favorites (also cue my shock that these are still on my list in 2025):

- The myth of the forced password reset. Once upon a time, security teams believed that making people change their passwords every 90 days would keep accounts safer. Instead, it just taught users to cycle through predictable variations (Password1, Password2, Password3), making attacks easier, not harder. We know better now, yet some companies still enforce the rule, which is security theater at its finest.

- The firewall rules that time forgot. Some organizations are still guarding their networks as if it were 2005, relying on firewall rules designed for a world before zero trust and fileless malware. Attackers aren’t storming the gates anymore; they’re slipping through the side doors, disguising themselves as legitimate traffic. A firewall built for an old threat model is little more than a relic.

- Compliance as a false sense of security. There’s a dangerous belief that passing an ISO 27001 audit or receiving a clean SOC 2 report means an organization is secure. It doesn’t. It means they met a baseline, at a particular moment in time, under controlled conditions. Attackers don’t care about audit cycles.

Benchmarks only matter if they keep up with reality. A survey marker that’s lost to time, buried under a landslide, is just a rock. A cybersecurity benchmark that no longer reflects how threats work today is just a distraction. The real test isn’t whether a control once made sense; it’s whether it still gets you where you need to go.

The Consequences of Relying on Bad Benchmarks

Failing to keep benchmarks updated has consequences. In the physical world, bad survey data can result in:

- Property disputes due to inaccurate boundary lines.

- Infrastructure failures when engineers unknowingly rely on incorrect elevation or coordinate data.

- Environmental damage when projects disrupt areas mistakenly thought to be outside protected zones.

In cybersecurity, outdated benchmarks can lead to:

- Data breaches due to reliance on old security models.

- Regulatory fines when compliance frameworks are misinterpreted or not updated.

- Operational disruptions when security teams follow ancient checklists instead of actively engaging with emerging threats.

The lesson from both fields is clear: benchmarks are not one-and-done; they require maintenance, validation, and adaptation.

The Ever-Important Witness Post

A benchmark, no matter how precisely placed, is only as reliable as its ability to be found and verified. Out in the desert, many benchmarks have been lost, not to time alone, but to neglect. The original marker may still be there, buried under shifting sand or obscured by new construction, but without a witness post, how would anyone know where to look?

Surveyors understand this problem well. That’s why they don’t just set benchmarks; they also install witness posts, brightly painted stakes or signs meant to draw attention to the benchmark’s location. These witness posts serve as a safeguard, ensuring that benchmarks remain visible, accessible, and properly referenced over time. A benchmark without a witness post is at risk of being forgotten, misinterpreted, or worse, ignored when it’s needed most.

Setting cybersecurity benchmarks is one thing, but ensuring they are actively monitored and maintained is another. Just as an old survey marker can be rendered useless by time and shifting landscapes, a cybersecurity benchmark that isn’t consistently revisited can become irrelevant, misleading, or outright dangerous.

So, what serves as a witness post for your cybersecurity benchmarks? Here are a few to consider:

- Continuous monitoring and validation: Just as surveyors periodically verify benchmark accuracy, cybersecurity teams must perform regular security assessments to confirm that their benchmarks still apply.

- Threat intelligence and adaptation: Relying on benchmarks that emphasize perimeter firewalls but fail to account for zero-trust architectures is like using a benchmark from the 1920s to build a modern highway: it’s just not going to get you where you need to go, and it fails to take advantage of current technology.

- Stakeholder awareness and documentation: A security policy document no one reads is like a missing witness post: it may technically exist, but if no one can find or use it, it might just as well never have been created.

- Learning from mistakes: A company that suffers a ransomware attack but doesn’t adjust its security benchmarks to account for lessons learned is doomed to repeat history.
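To make the idea concrete, here is a minimal sketch of a witness-post check for a security benchmark. Everything in it is an assumption for illustration: the `SecurityBenchmark` fields, the `missing_witness_posts` helper, and the one-year review window are hypothetical, not part of any standard or tool.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class SecurityBenchmark:
    name: str
    last_reviewed: date   # when someone last validated the benchmark
    owner: str            # who maintains it; empty means nobody does
    doc_location: str     # where stakeholders can find it; empty means nowhere


def missing_witness_posts(
    benchmark: SecurityBenchmark,
    today: date,
    max_age_days: int = 365,  # assumed review window, adjust to taste
) -> List[str]:
    """List the ways this benchmark could quietly get lost."""
    problems = []
    if today - benchmark.last_reviewed > timedelta(days=max_age_days):
        problems.append("stale: not reviewed within the review window")
    if not benchmark.owner:
        problems.append("no owner to maintain it")
    if not benchmark.doc_location:
        problems.append("no documented location for stakeholders")
    return problems


# Hypothetical example: a rule nobody has looked at in years
password_rule = SecurityBenchmark(
    name="90-day forced password reset",
    last_reviewed=date(2015, 6, 1),
    owner="",
    doc_location="",
)

for problem in missing_witness_posts(password_rule, today=date(2025, 1, 15)):
    print(f"{password_rule.name}: {problem}")
```

An empty list means the benchmark can still be found and trusted. Anything else is a marker slowly sinking into the sand.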

Do Your Benchmarks Have Witness Posts?

This brings us back to the title of this post: do your benchmarks have witness posts?

In surveying, a benchmark without a witness post is at risk of disappearing into the shifting sands of the desert. A cybersecurity benchmark without continuous care suffers the same fate, ending up buried under digital detritus. Setting a benchmark is not enough; it has to be maintained, revisited, and made visible to those who rely on it.

So, the next time you review your cybersecurity benchmarks, ask yourself:

- Are our security benchmarks still relevant to the threats we face?

- Do we have clear indicators to monitor and verify them over time?

- Do stakeholders know where to find them and how to use them?

If the answer to any of these is no, it’s time to add a witness post. Whether you’re roaming the desert like me, searching for an old survey marker, or protecting an enterprise from cyber threats, the real danger isn’t just having an outdated benchmark; it’s failing to ensure it can be found and used when it matters most.

© 2025 Jack Hamm. All rights reserved.

All photos and content are protected under copyright law and may not be used or reproduced without explicit permission.